Cybercriminals Exploit AI Hype to Spread Ransomware and Malware

Artificial Intelligence (AI) has captivated the world with its rapid progress and transformative capabilities. From language models and image generators to voice assistants and predictive tools, AI is now embedded in our daily lives. But while companies and consumers are embracing this revolution, cybercriminals are exploiting the AI hype for far more sinister purposes.

Increasingly, attackers are using the growing interest in AI as a trap, spreading malware and ransomware under the guise of powerful new AI tools. What was once a curiosity-driven search for the latest technology has now become a risky path for unsuspecting users.

The Bait: Fake AI Tools and Platforms

One of the most common methods attackers use is creating fake AI websites and downloadable apps that mimic popular tools like ChatGPT, Midjourney, or Google Bard (since renamed Gemini). These platforms often promise advanced AI features or free access to premium tools. The user, unaware of the scam, downloads what appears to be a genuine app. In reality, they’re installing malicious software.

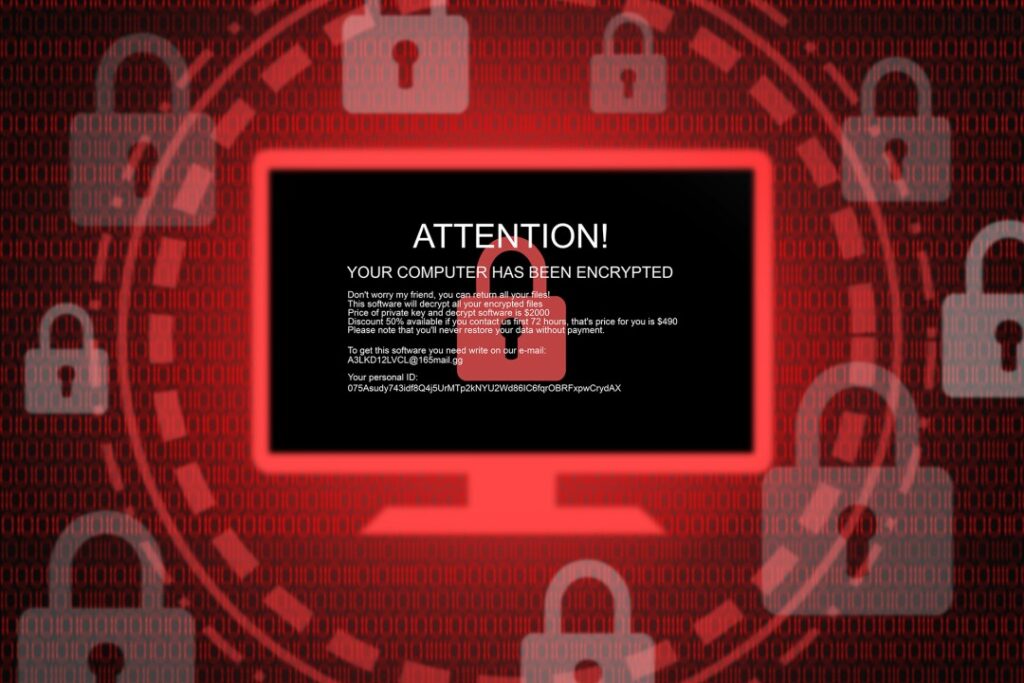

Sometimes the trap is even more sophisticated. Hackers develop polished interfaces that appear to work – offering chatbot replies or image generation – while silently deploying ransomware or spyware in the background. Victims don’t suspect anything until their system becomes encrypted or their sensitive data is stolen.

In some cases, phishing emails are used to lure victims with offers of AI job assistants, free productivity bots, or beta access to “exclusive” tools. Others are tricked via search engine manipulation, where fake AI-related sites are optimized to appear high in Google results. The strategy relies on people’s eagerness to try trending technologies quickly without verifying the source.

Malware Behind the Mask

What makes this trend particularly dangerous is the variety and potency of the malware being distributed. Ransomware is a key player: it encrypts the files on a victim’s machine and demands a ransom payment, typically in cryptocurrency. Victims often realize they’ve been infected only when it’s too late, once their documents, photos, and work files are locked away.

Another major threat is infostealers: malicious programs that quietly collect passwords, credit card information, crypto wallet data, and browser activity. These are commonly disguised within apps posing as AI chat tools or productivity enhancers. Remote Access Trojans (RATs) are also on the rise. These programs give cybercriminals full control over a device and are often installed through fake AI assistants or “smart” desktop tools.

These attacks are not always immediate. Some malware waits days or weeks before activating, making it even harder to trace the source of infection.

Why This Works So Well

The effectiveness of this strategy lies in human psychology. AI is hot, fast-moving, and competitive. Everyone, from students to CEOs, is trying to stay ahead of the curve, which means there’s pressure to adopt new tools quickly. This urgency makes people more likely to overlook red flags or bypass standard security precautions.

Additionally, the branding around AI carries an aura of legitimacy and innovation. Many people assume a tool associated with AI must be safe and useful, which is exactly the assumption hackers are relying on. Combined with slick designs and clever social engineering tactics, the scams are incredibly convincing.

Real-World Cases

There have already been high-profile incidents where fake AI tools have caused real damage. A ChatGPT desktop application distributed through forums and YouTube tutorials was recently found to contain the RedLine Stealer, a powerful malware that extracts sensitive user data. In another case, cloned versions of AI art platforms circulated online, infecting users with keyloggers and backdoors. Even professional networks like LinkedIn saw an influx of fake AI job tools targeting job seekers.

These examples underline the increasing sophistication of attackers and their growing focus on AI as a disguise.

How to Stay Protected

While the threat is serious, the good news is that simple precautions can greatly reduce your risk. First and foremost, always download AI tools from official websites or verified app stores. Be skeptical of “too good to be true” offers, especially those found on lesser-known sites or forums. Avoid pirated versions of popular tools; they’re a common carrier of embedded malware.
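For readers who script their downloads, the "official websites only" rule can be sketched as a hostname allowlist check. This is a minimal illustration, not a complete defense: the function name `is_official_source` is made up for this example, and the `OFFICIAL_DOMAINS` set is an assumption that you should replace with domains you have verified yourself.

```python
from urllib.parse import urlsplit

# Illustrative allowlist (an assumption for this sketch): replace it with
# vendor domains you have confirmed yourself.
OFFICIAL_DOMAINS = {"openai.com", "chatgpt.com", "midjourney.com"}


def is_official_source(url: str) -> bool:
    """Return True only for HTTPS URLs hosted on an allowlisted domain
    or on one of its subdomains."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # hostname drops any "user@" prefix and port, and is already lowercased
    host = (parts.hostname or "").rstrip(".")
    return any(host == d or host.endswith("." + d) for d in OFFICIAL_DOMAINS)


if __name__ == "__main__":
    for candidate in (
        "https://chatgpt.com/download",
        "https://chatgpt.com.free-ai-tools.example/setup.exe",
        "https://openai.com@evil.example/app",
    ):
        print(candidate, "->", is_official_source(candidate))
```

Matching on the full hostname, rather than searching for a brand name anywhere in the URL, is the point of the sketch: a link such as `chatgpt.com.free-ai-tools.example` contains the brand but is hosted elsewhere, so it is rejected.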

Keep your operating system, antivirus, and browser up to date. Many malware variants rely on exploiting outdated software. Also, consider using browser extensions or security plugins that flag suspicious sites. For organizations, implementing strong email filters, employee awareness training, and sandbox environments for testing new software can offer essential protection.
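To give a rough idea of how a tool might flag suspicious sites, here is a minimal sketch of a lookalike-domain heuristic. It is an assumption-laden illustration, not how any particular browser extension works: the `KNOWN_BRANDS` list, the `lookalike_of` function, and the 0.8 similarity threshold are all hypothetical choices made for this example.

```python
from difflib import SequenceMatcher

# Hypothetical brand names to watch for (an assumption for this sketch).
KNOWN_BRANDS = ("chatgpt", "midjourney", "openai")


def lookalike_of(host: str, threshold: float = 0.8):
    """Return the brand that a hostname label imitates, or None.

    A label is flagged when it embeds a brand name alongside extra text
    (e.g. "chatgpt-free-download") or is a near-miss spelling of one
    (e.g. "chatgtp"). Labels that equal a brand exactly are not flagged.
    """
    for label in host.lower().split("."):
        for brand in KNOWN_BRANDS:
            if label == brand:
                continue  # exact match: not a lookalike
            similarity = SequenceMatcher(None, label, brand).ratio()
            if brand in label or similarity >= threshold:
                return brand
    return None


if __name__ == "__main__":
    for host in ("chatgtp.example", "chatgpt-free-download.example", "chatgpt.com"):
        print(host, "->", lookalike_of(host))
```

A heuristic like this only catches misspellings and padded brand names. It does not notice a brand used as a subdomain of an unrelated site, so it should complement, not replace, checking the source against known official domains.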

Most importantly, don’t let hype override caution. The promise of AI is real, but so are the risks of blindly following the trend.

Final Thoughts

The AI boom is not just attracting innovators and enthusiasts; it’s also drawing in cybercriminals looking to take advantage of the excitement. As fake tools proliferate and malware grows more deceptive, understanding these risks is the first step toward defense.

Stay informed. Stay cautious. And let your curiosity for AI be guided by smart digital hygiene.